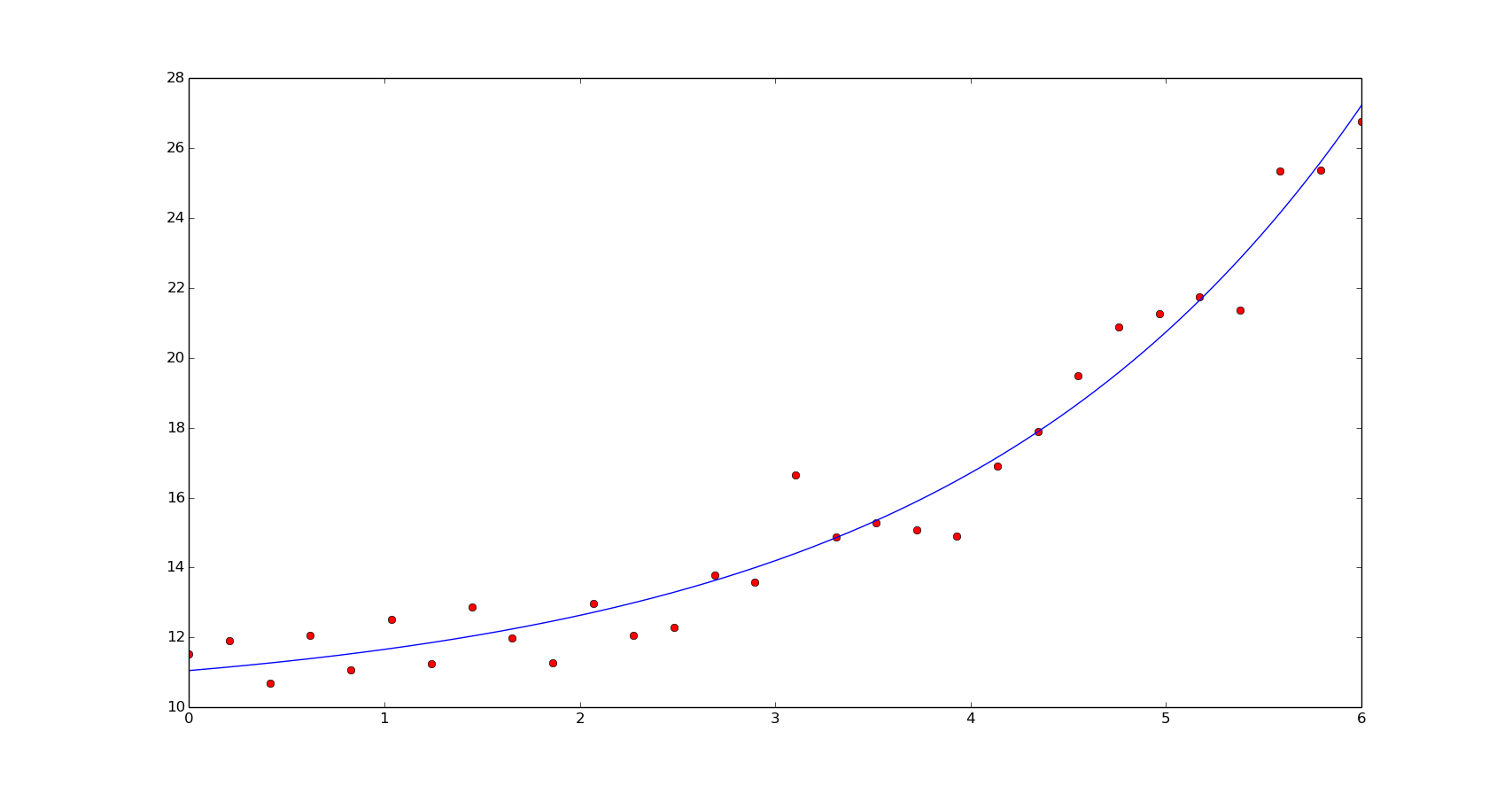

The scikit-learn example draws each panel with `subplots(figsize=(4, 3))`. Inside the loop `for _ in range(6):`, the training inputs are perturbed with `this_X = 0.1 * np.random.normal(size=(2, 1)) + X_train`, and the perturbed points are plotted with `ax.scatter(this_X, y_train, s=3, c="gray", marker="o", zorder=10)`. The final fit is drawn from `clf.predict(X_test)` with `linewidth=2, color="blue"`, and the original training points are marked with `ax.scatter(X_train, y_train, s=30, c="red", marker="+", zorder=10)`. The estimators, one of which is `Ridge(alpha=0.1)`, are visited with `for name, clf in classifiers.items():`.

This chapter describes the `Parameter` object, which is a key concept of lmfit. A `Parameter` is the quantity to be optimized in all minimization problems, replacing the plain floating point number used in the optimization routines from `scipy.optimize`.

For non-Gaussian data noise, least squares is (usually) just a recipe without any probabilistic interpretation, so it gives no uncertainty estimates.

Create a new data set by adding multiple copies of each data point, the number of copies given by that point's integer weight. Do a least squares fit on this new data set.

`curve_fit` is part of `scipy.optimize` and wraps the module's lower-level least-squares routines to overcome their poor usability. It uses non-linear least squares to fit a function, `f`, to data. All we have to do is import the package, define the function whose parameters we want to optimize, and let the package do the magic.
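The `curve_fit` workflow described above (import, define the function, fit) might look like the sketch below. The exponential model, the synthetic data, and the starting guess `p0` are assumptions made purely for illustration; they do not come from the original text.

```python
import numpy as np
from scipy.optimize import curve_fit


def model(x, a, b):
    """Hypothetical model for illustration: a * exp(-b * x)."""
    return a * np.exp(-b * x)


# Synthetic data (assumed for this sketch): true parameters a=2.5, b=1.3.
rng = np.random.default_rng(0)
x_data = np.linspace(0.0, 4.0, 50)
y_data = model(x_data, 2.5, 1.3) + 0.02 * rng.normal(size=x_data.size)

# curve_fit returns the best-fit parameters and their covariance matrix.
popt, pcov = curve_fit(model, x_data, y_data, p0=(1.0, 1.0))

# Square roots of the covariance diagonal give one-sigma uncertainties.
perr = np.sqrt(np.diag(pcov))

print("fitted a, b:", popt)
print("uncertainties:", perr)
```

The covariance matrix is only meaningful as an uncertainty estimate when the noise is roughly Gaussian, which is the case in this synthetic data.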

The example seeds the random number generator with `seed(0)` and stores its estimators in `classifiers = dict(...)`, with an `ols` entry set to `linear_model.LinearRegression()` and a `ridge` entry that also comes from `linear_model`.

Least squares problems, which minimize the norm of a vector function, have a specific structure that can be exploited by the Levenberg-Marquardt algorithm, which `scipy.optimize` implements. For fitting a model function to data, we can make use of a handy function from `scipy.optimize` called `curve_fit`.

NumPy and SciPy must be installed, on the server, to a separate version of Python 2.

The equation may be under-, well-, or over-determined (i.e., the number of linearly independent rows of `a` can be less than, equal to, or greater than its number of linearly independent columns).

Method 1: Create an integer weighting by inverting the errors (1/error), multiplying by some suitable constant, and rounding to the nearest integer.
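Method 1 can be sketched as follows: build integer weights from the inverted errors, replicate each point that many times, and run an ordinary least squares fit on the enlarged data set. The data values, the scaling constant `scale`, and the straight-line model are assumptions chosen for illustration only.

```python
import numpy as np

# Synthetic data for illustration (assumed): roughly y = 2x + 1,
# with a known error on each point.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 6.8, 9.1])
errors = np.array([0.1, 0.2, 0.1, 0.5, 0.25])

# Step 1: integer weights from inverted errors (1/error), scaled and rounded.
scale = 1.0  # the "suitable constant"; chosen arbitrarily here
weights = np.rint(scale / errors).astype(int)

# Step 2: new data set with each point repeated according to its weight.
x_rep = np.repeat(x, weights)
y_rep = np.repeat(y, weights)

# Step 3: ordinary least squares straight-line fit on the replicated data.
slope, intercept = np.polyfit(x_rep, y_rep, deg=1)

print("weights:", weights)
print("slope, intercept:", slope, intercept)
```

Replicating a point `n` times multiplies its squared residual by `n`, so the same line should come out of `np.polyfit(x, y, 1, w=np.sqrt(weights))` without building the larger data set.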

Computes the vector `x` that approximately solves the equation `a @ x = b`.
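As a small illustration of solving `a @ x = b` in the least-squares sense, the sketch below fits a straight line to four points with `numpy.linalg.lstsq`. The data values are assumptions chosen for the example; the system is over-determined (four equations, two unknowns).

```python
import numpy as np

# Four observations (assumed for this sketch) of a roughly linear relation.
x = np.array([0.0, 1.0, 2.0, 3.0])
b = np.array([-1.0, 0.2, 0.9, 2.1])

# Design matrix: one column for the slope, one column of ones for the intercept.
a = np.column_stack([x, np.ones_like(x)])

# lstsq returns the solution, the sum of squared residuals, the rank of a,
# and the singular values of a.
solution, residuals, rank, singular_values = np.linalg.lstsq(a, b, rcond=None)
slope, intercept = solution

print("slope, intercept:", slope, intercept)
```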

#SCIPY LEAST SQUARES CODE#

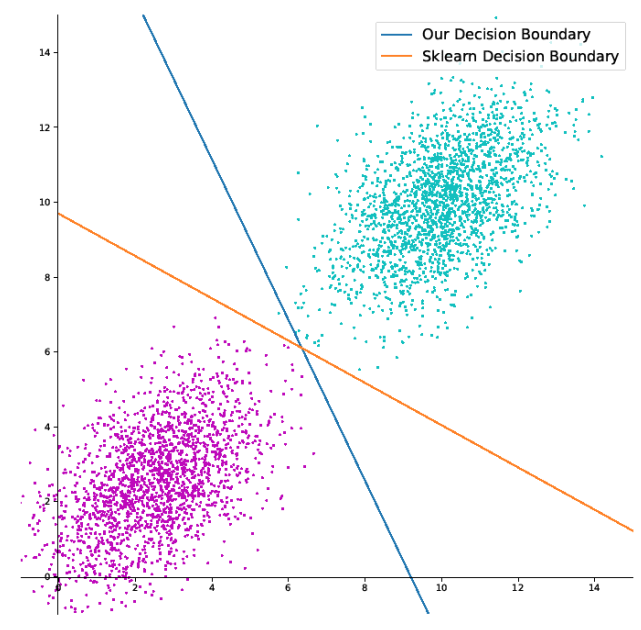

Code source: Gaël Varoquaux. Modified for documentation by Jaques Grobler. License: BSD 3 clause. The example imports `numpy as np`, `matplotlib.pyplot as plt`, and `linear_model` from `sklearn`, and builds `X_train` with NumPy.

The Partial Least Squares Regression procedure estimates partial least squares (PLS, also known as "projection to latent structure") regression models.

NumPy's `lstsq` returns the least-squares solution to a linear matrix equation.
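The scikit-learn comparison of ordinary least squares and ridge regression referred to above can be approximated by the sketch below. It is not the original script: plotting is left out, the values of `X_train`, `y_train` and `X_test` are assumptions (the source only shows that `X_train` is built with NumPy), and the `slope_spread` dictionary is a hypothetical helper added to summarize the result numerically.

```python
import numpy as np
from sklearn import linear_model

# Assumed inputs for this sketch: two training points and two test points.
X_train = np.array([[0.5], [1.0]])
y_train = np.array([0.5, 1.0])
X_test = np.array([[0.0], [2.0]])

np.random.seed(0)

classifiers = dict(
    ols=linear_model.LinearRegression(),
    ridge=linear_model.Ridge(alpha=0.1),
)

slope_spread = {}  # hypothetical helper: spread of fitted slopes per estimator
for name, clf in classifiers.items():
    slopes = []
    for _ in range(6):
        # Perturb the training inputs with small Gaussian noise and refit.
        this_X = 0.1 * np.random.normal(size=(2, 1)) + X_train
        clf.fit(this_X, y_train)
        slopes.append(clf.coef_[0])
    slope_spread[name] = np.std(slopes)

    # Final fit on the unperturbed training data.
    clf.fit(X_train, y_train)
    print(name, "slope spread:", slope_spread[name])
    print(name, "predictions on X_test:", clf.predict(X_test))
```

With only two training points, the ordinary least squares slope reacts strongly to each perturbation, while the ridge penalty keeps the slope more stable across refits.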
